- #AIRFLOW SCHEDULER AUTO RESTART HOW TO#

- #AIRFLOW SCHEDULER AUTO RESTART PASSWORD#

- #AIRFLOW SCHEDULER AUTO RESTART DOWNLOAD#

root/.cache/helm/repository/airflow-1.5.0.tgz

In this way, the template files are created in the current path, with a bunch of files and directories. Note that not all of them are used in this blog post.

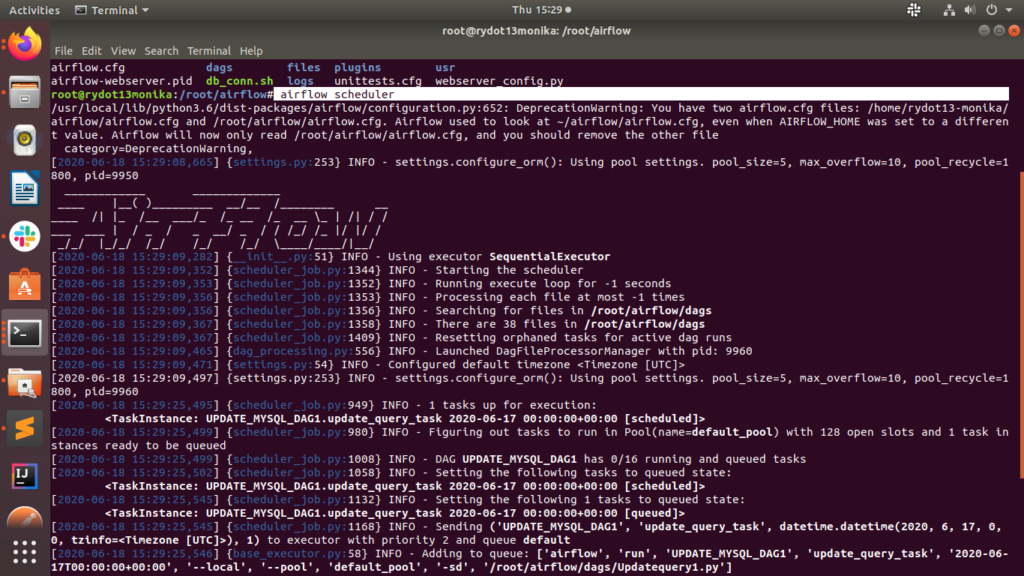

To do so for our Airflow instance, we can use the following commands to define the Airflow repository, download the Helm chart locally, and finally create the template YAML files.

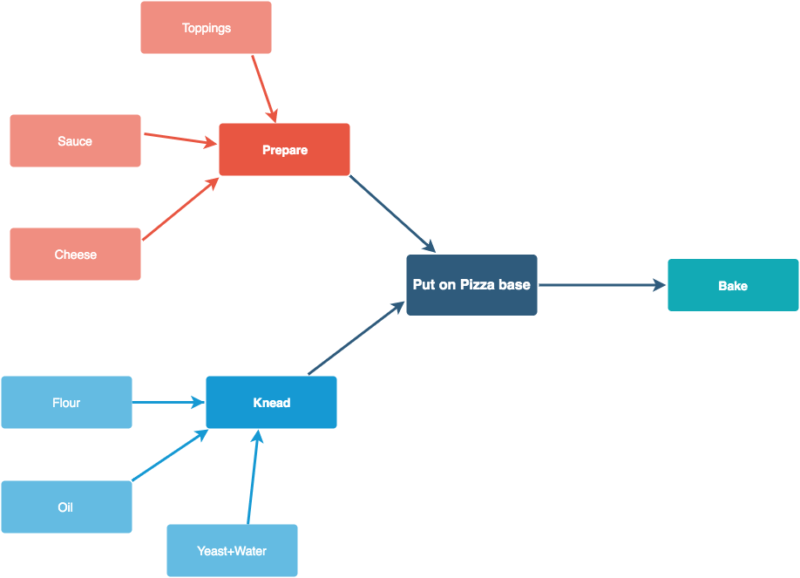

The scheduler and the webserver are the two main components of the Airflow platform. The scheduler runs the task instances in the structured order defined by what is called a DAG (Directed Acyclic Graph), and the webserver is the web UI used to monitor all the scheduled workflows. The accompanying picture shows all the main blocks of the deployment.

So, as we said, the first thing to do to prepare our Airflow environment on Kubernetes is to prepare the YAML files for the deployment of the required resources. Usually, when creating such files, we can download templates from the Helm charts repository using the Helm package manager. Once downloaded, the files can be adapted to our specific needs, in order to reflect the desired application deployment.

For the Postgres database, we will first need to create a ConfigMap, to configure the database username and password; then, a Persistent Volume and a Claim, to store the data on a physical disk; and lastly, a Postgres service resource, to expose the database connection to the Airflow scheduler.

Finally, we will be able to deploy the actual Airflow components: the Airflow scheduler and the Airflow webserver.
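As a rough illustration of the Postgres resources listed above, the manifests could look something like the sketch below. Every concrete value in it is a placeholder chosen for this example rather than something taken from the original setup: the `airflow` namespace, the resource names (`postgres-config`, `postgres-pv`, `postgres-pvc`, `postgres`), the `manual` storage class name, the `/mnt/data/postgres` host path, the 5Gi size, the `app: postgres` label and the credentials. The `POSTGRES_DB`, `POSTGRES_USER` and `POSTGRES_PASSWORD` keys are the environment variables read by the official `postgres` container image.

```yaml
# ConfigMap with the database name and credentials (placeholder values).
apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-config
  namespace: airflow
data:
  POSTGRES_DB: airflow
  POSTGRES_USER: airflow
  POSTGRES_PASSWORD: change-me
---
# PersistentVolume backed by a directory on the node's disk (assumed path).
apiVersion: v1
kind: PersistentVolume
metadata:
  name: postgres-pv
spec:
  storageClassName: manual
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /mnt/data/postgres
---
# Claim that binds to the volume above (same class, size and access mode).
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc
  namespace: airflow
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
# Service exposing Postgres inside the cluster on its default port.
# Assumes the Postgres pods carry the label app: postgres.
apiVersion: v1
kind: Service
metadata:
  name: postgres
  namespace: airflow
spec:
  selector:
    app: postgres
  ports:
    - port: 5432
      targetPort: 5432
```

In a setup like this, the Postgres pod would typically load the ConfigMap as environment variables and mount the claim as its data directory. Outside of a demo, credentials are usually kept in a Secret rather than a ConfigMap.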

The first items that we will need to create are the Kubernetes resources. These define how and where Airflow should store data (Persistent Volumes and Claims, Storage class resources), and how to assign an identity, together with the required permissions, to the deployed Airflow service (Service account, Role, and Role binding resources).

Then we will need a Postgres database: Airflow uses an external database to store metadata about running workflows and their tasks, so we will also show how to deploy Postgres on top of the same Kubernetes cluster where we want to run Airflow.
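To make the identity resources mentioned above a little more concrete, here is a minimal sketch of a Service account, a Role and a Role binding. The names (`airflow`, `airflow-pod-manager`) and the `airflow` namespace are hypothetical, and the list of permissions is only an assumption about what an Airflow deployment that launches worker pods might need; it should be adjusted to the actual deployment.

```yaml
# Identity that the Airflow pods run as (hypothetical name).
apiVersion: v1
kind: ServiceAccount
metadata:
  name: airflow
  namespace: airflow
---
# Namespaced permissions: manage pods and read their logs (assumed set).
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: airflow-pod-manager
  namespace: airflow
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create", "get", "list", "watch", "delete"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get"]
---
# Grants the Role above to the ServiceAccount.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: airflow-pod-manager
  namespace: airflow
subjects:
  - kind: ServiceAccount
    name: airflow
    namespace: airflow
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: airflow-pod-manager
```

A Role is scoped to a single namespace, which keeps the permissions limited to the namespace where Airflow runs.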

These days, data-driven companies usually have a huge number of workflows and tasks running in their environments: automated processes that support daily operations and activities for most of their departments, and that include a wide variety of tasks, from simple file transfers to complex ETL workloads or infrastructure provisioning. To obtain better control and visibility of what is going on in the environments where these processes are executed, there needs to be a controlling mechanism, usually called a scheduler.

Airflow is an open-source platform that helps companies monitor and schedule their daily processes. It lets users programmatically author, schedule and monitor workflows using Python, and it can be integrated with the most well-known cloud and on-premise systems that provide data storage or data processing. On top of this, it also offers an integrated web UI where users can create, manage and observe workflows and their completion status, ensuring observability and reliability.

In today’s technological landscape, where resources are precious and often spread thinly across different elements of an enterprise architecture, Airflow also offers scalability and dynamic pipeline generation: it can run on top of Kubernetes clusters, allowing us to automatically spin up the workers inside Kubernetes containers. This enables users to dynamically create Airflow workers and executors whenever and wherever they need more power, optimising the utilisation of available resources (and the associated costs!).

In this blog series, we will dive deep into Airflow: first, we will show you how to create the essential Kubernetes resources to be able to deploy Apache Airflow on two nodes of the Kubernetes cluster (the installation of the K8s cluster is not in the scope of this article, but if you need help with that, you can check out this blog post!); then, in the second part of the series, we will develop an Airflow DAG file (workflow) and deploy it on the previously installed Airflow service on top of Kubernetes.

As mentioned above, the objective of this article is to demonstrate how to deploy Airflow on a K8s cluster. To do so, we will need to create and initialise a set of auxiliary resources using YAML configuration files.
